This repository provides a Dockerfile to run inceptionism/DeepDream and "A Neural Algorithm of Artistic Style" with the following repositories.

The following examples were created with create-outputs.sh and show an input content image followed by the DeepDream content image, and then pairs of input artistic styles and the DeepDream content image with the style applied. See the gallery for more examples.

Tweet the style and source image to @brandondamos with the hashtag #DreamArtRequest and I'll manually generate images for you. If there's enough interest, I'll create a bot to automatically process images with #DreamArtRequest on my server.

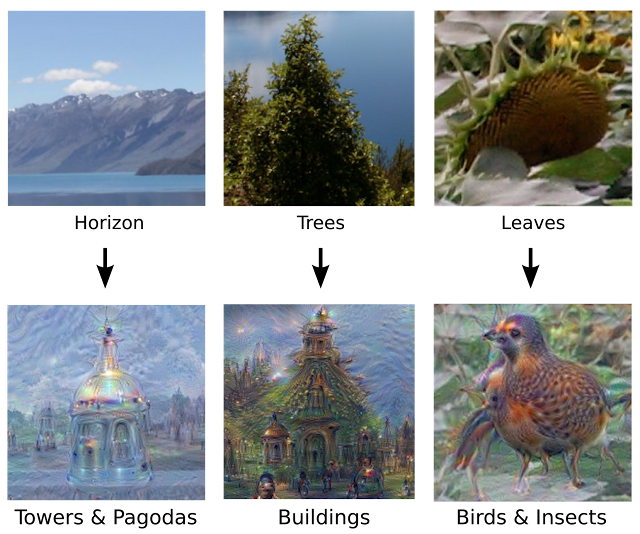

Inceptionism is introduced in Google Research's blog post and visualizes an artificial neural network by enhancing input images to elicit an interpretation. The following example is from the blog post. See the original gallery or #DeepDream for more examples.

The current implementations are google/deepdream which uses Caffe and eladhoffer/DeepDream.torch which uses Torch.

"A Neural Algorithm of Artistic Style" is a paper by Leon Gatys, Alexander Ecker, and Matthias Bethge released as a preprint on August 26, 2015. The current implementations are jcjohnson/neural-style and kaishengtai/neuralart, which both use Torch.

Nontrivial toolchains and system configuration make the barrier to running the current implementations high. This repository lowers the barrier by combining DeepDream.torch with neural-style into Torch applications that share the same model and run on the CPU and GPU.

Ideally, both of these implementations should ship on luarocks and provide a command-line interface and library that nicely load the models. When they do, this repository will be obsolete, but until then, this repository glues them together and provides a Dockerfile to simplify deployment.

Some portions below are from jcjohnson/neural-style.

Clone with --recursive or run git submodule init && git submodule update after checking out.

Run ./models/download_models.sh to download the original VGG-19 model. Leon Gatys has graciously provided the modified version of the VGG-19 model that was used in their paper; this will also be downloaded. By default the original VGG-19 model is used. This model is shared between DeepDream and NeuralStyle.

DreamArt can be deployed as a container with Docker for CPU mode:

./models/download_models.sh

sudo docker build -t dream-art .

sudo docker run -t -i -v $PWD:/dream-art dream-art /bin/bash

cd /dream-art

./deepdream.lua -gpu -1 -content_image ./examples/inputs/golden_gate.jpg -output_image golden_gate_deepdream.png

./neural-style.sh -gpu -1 -content_image ./golden_gate_deepdream.png -style_image ./examples/inputs/starry_night.jpg -output_image golden_gate_deepdream_starry.png

To use, place your images in dream-art on your host and access them from the shared Docker directory.
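The two commands above can be chained for any content and style pair. The following is a minimal sketch, assuming it is run from the dream-art directory inside the container; the function name `dream_art`, the `DRY_RUN` variable, and the output naming scheme are illustrative and not part of this repository.

```shell
# Sketch: run DeepDream on a content image, then apply a style to the result.
# dream_art, DRY_RUN, and the output file names are hypothetical helpers.
dream_art() (
  content="$1"
  style="$2"
  gpu="${3:--1}"   # -1 selects CPU mode, as in the commands above

  # Derive output names from the input file names without their extensions.
  base="$(basename "${content%.*}")"
  style_base="$(basename "${style%.*}")"
  dreamed="${base}_deepdream.png"
  styled="${base}_deepdream_${style_base}.png"

  # With DRY_RUN=1 the commands are printed instead of executed.
  run=""
  if [ "${DRY_RUN:-0}" = "1" ]; then run="echo"; fi

  $run ./deepdream.lua -gpu "$gpu" -content_image "$content" \
      -output_image "$dreamed" &&
  $run ./neural-style.sh -gpu "$gpu" -content_image "./$dreamed" \
      -style_image "$style" -output_image "$styled"
)
```

For example, `dream_art ./examples/inputs/golden_gate.jpg ./examples/inputs/starry_night.jpg` would write golden_gate_deepdream.png and then golden_gate_deepdream_starry_night.png. The function body runs in a subshell, so its variables do not leak into the calling shell.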

Dependencies:

Optional dependencies:

- CUDA 6.5+

- cudnn.torch

See ./deepdream.lua -help and ./neural-style.sh -help for the most updated docs.

dream-art(master*)$ ./deepdream.lua -help

-content_image Content target image [examples/inputs/tubingen.jpg]

-gpu Zero-indexed ID of the GPU to use; for CPU mode set -gpu = -1 [0]

-num_iter [100]

-num_octave [8]

-octave_scale [1.4]

-end_layer [32]

-clip [true]

-proto_file [models/VGG_ILSVRC_19_layers_deploy.prototxt]

-model_file [models/VGG_ILSVRC_19_layers.caffemodel]

-backend nn|cudnn [nn]

-output_image [out.png]

dream-art(master*)$ ./neural-style.sh -help

-style_image Style target image [examples/inputs/seated-nude.jpg]

-content_image Content target image [examples/inputs/tubingen.jpg]

-image_size Maximum height / width of generated image [512]

-gpu Zero-indexed ID of the GPU to use; for CPU mode set -gpu = -1 [0]

-content_weight [5]

-style_weight [100]

-tv_weight [0.001]

-num_iterations [1000]

-init random|image [random]

-print_iter [50]

-save_iter [100]

-output_image [out.png]

-style_scale [1]

-pooling max|avg [max]

-proto_file [models/VGG_ILSVRC_19_layers_deploy.prototxt]

-model_file [models/VGG_ILSVRC_19_layers.caffemodel]

-backend nn|cudnn [nn]

- How long does it take to process a single image? This depends on the neural network model, image size, and number of iterations. On average, DeepDream takes about 5 minutes and the artistic style transfer takes about 15 minutes on a GPU.

- How much memory does this use? This also depends on the neural network model, image size, and number of iterations. On average, both consume about 4GB of memory.
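Since both answers depend on image size and iteration count, a quick low-cost preview can be sketched by lowering the corresponding options from the help output above. The values below are illustrative assumptions, not tuned recommendations, and the function name `quick_preview` and its output file names are hypothetical. How much time or memory this actually saves has not been measured here.

```shell
# Illustrative reduced settings; the defaults are 100 iterations and 8 octaves
# for DeepDream, and 512 pixels and 1000 iterations for neural-style.
DEEPDREAM_QUICK="-num_iter 20 -num_octave 4"
STYLE_QUICK="-image_size 256 -num_iterations 200"

quick_preview() {
  # $1: content image, $2: style image, $3: GPU id (defaults to -1, CPU mode)
  # The *_QUICK variables are unquoted on purpose so they split into flags.
  ./deepdream.lua -gpu "${3:--1}" $DEEPDREAM_QUICK \
      -content_image "$1" -output_image quick_deepdream.png &&
  ./neural-style.sh -gpu "${3:--1}" $STYLE_QUICK \
      -content_image quick_deepdream.png -style_image "$2" \
      -output_image quick_styled.png
}
```

Once a preview looks promising, rerunning with the default options should give the full-quality result.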

All portions are MIT licensed by Brandon Amos unless otherwise noted.

This project uses and modifies the following open source projects and resources. Modifications remain under the original license.

| Project | Modified | License |
|---|---|---|
| DeepDream.torch | Yes | MIT |
| jcjohnson/neural-style | No | MIT |